Interaction Framework

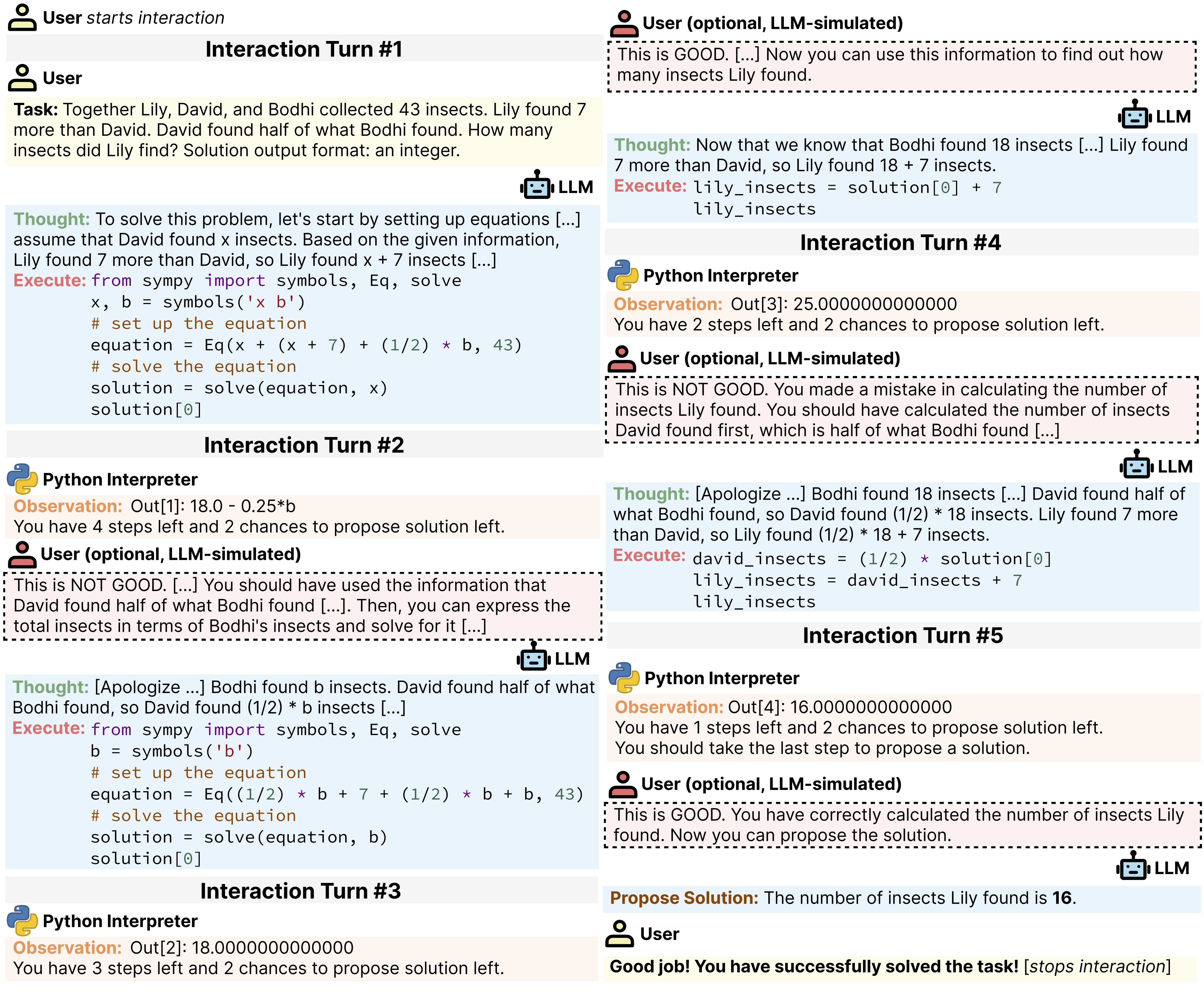

MINT mirrors the real-world User-LLM-Tool collaborative problem-solving setting. To solve a problem, the LLM can (1) use external tools by generating and executing Python programs and/or (2) collecting natural language feedback to refine its solutions; the feedback is provided by GPT-4, aiming to simulate human users in a reproducible and scalable way.

- We measure LLMs' tool-augmented task-solving capability by analyzing its performance gain with increased numbers of turns without language feedback (i.e., no red dotted box in the figure below).

- We quantify LLMs' ability to leverage natural language feedback with the performance gain upon receiving GPT-4 generated feedback (i.e., performance without and with red dotted box in the figure below).