A new benchmark tailored for LLMs' multi-turn interactions

We often interact with Large Language Models (LLMs) like ChatGPT in multi-turn dialogues, yet we predominantly evaluate them with single-turn benchmarks. Bridging this gap, we introduce MINT, a new benchmark tailored for LLMs’ multi-turn interactions. 🧵

MINT mirrors real-world user-LLM-tool interactions to evaluate two key LLM multi-turn capabilities:

1️⃣ Tool-augmented problem-solving

2️⃣ Ability to leverage natural language feedback

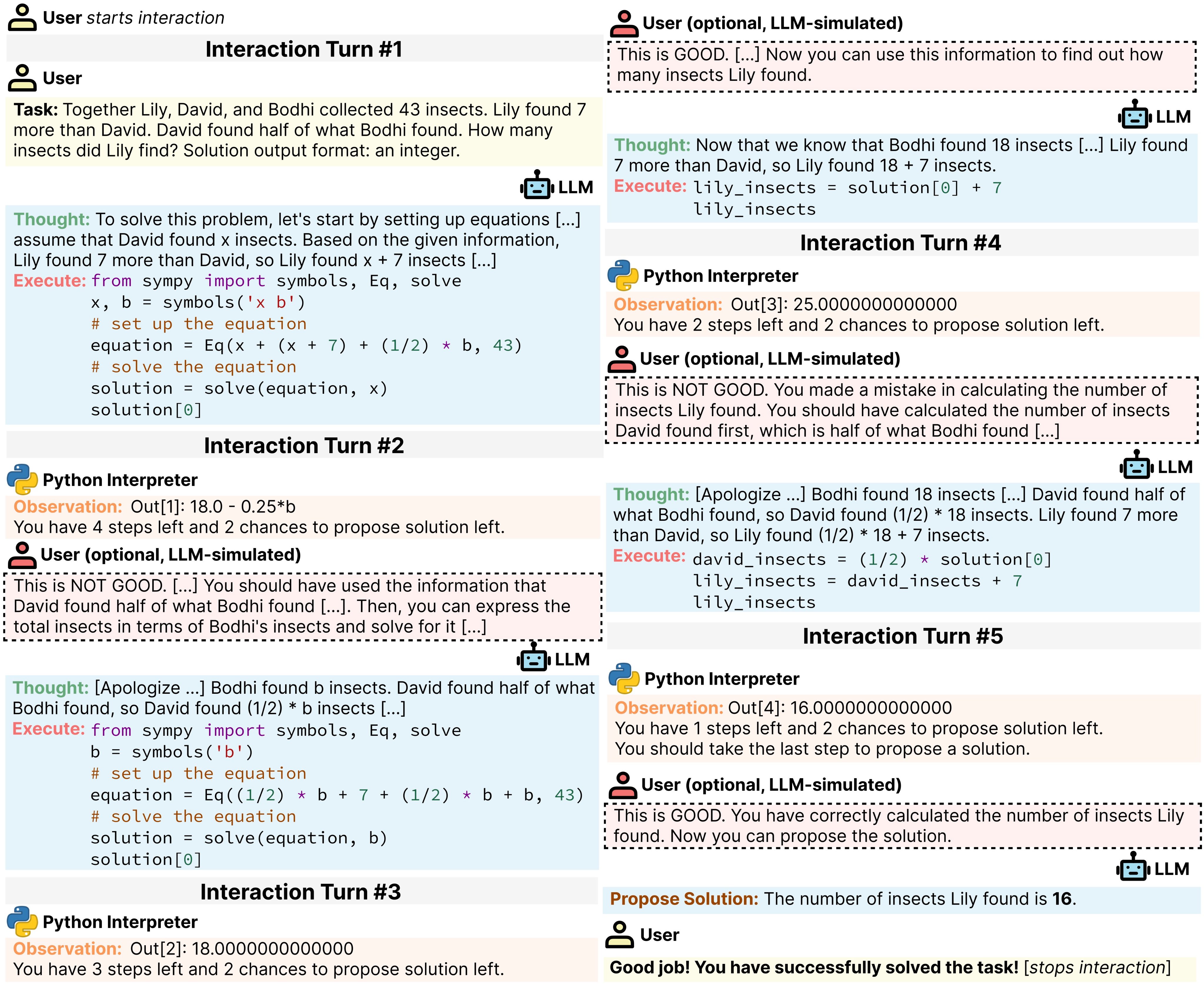

To solve a problem, the LLM can (1) use external tools by writing Python programs (‘Execute’ in the figure) and/or (2) collect natural language feedback to refine its solution (red dotted box in the figure). The feedback is provided by GPT-4, which simulates a human user.
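The interaction loop described above can be sketched as follows. This is a minimal illustration, not MINT's actual implementation (see the code repository for that). It assumes a hypothetical `propose` callable standing in for the LLM, which returns either Python code to execute or a final answer; a hypothetical `check` function holding the task's ground truth; and an optional hypothetical `give_feedback` callable standing in for the GPT-4 feedback provider. How a wrong answer is handled (here it is reported back as an observation and the loop continues) is also an assumption. The snippet runs code with a bare `exec`, which is acceptable only for illustration, never for untrusted model output.

```python
import contextlib
import io
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Turn:
    action: str                     # code the model ran, or the answer it proposed
    observation: str                # tool output, error message, or answer verdict
    feedback: Optional[str] = None  # natural language feedback, if a provider is used


def run_python(code: str) -> str:
    """Run a snippet and capture its stdout. NOT sandboxed: illustration only."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})
    except Exception as exc:  # errors are returned to the model as observations
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue()


def solve_interactively(
    propose: Callable[[list[Turn]], tuple[str, str]],  # hypothetical LLM: history -> (kind, text)
    check: Callable[[str], bool],                        # hypothetical ground-truth checker
    give_feedback: Optional[Callable[[list[Turn]], str]] = None,  # hypothetical feedback provider
    max_turns: int = 5,
) -> tuple[bool, list[Turn]]:
    """Return (solved, history) after at most max_turns model actions."""
    history: list[Turn] = []
    for _ in range(max_turns):
        kind, content = propose(history)
        if kind == "answer":
            if check(content):
                return True, history
            observation = "The proposed answer is incorrect."  # assumed wording
        else:  # kind == "execute": run the code as a tool call
            observation = run_python(content)
        turn = Turn(action=content, observation=observation)
        if give_feedback is not None:
            turn.feedback = give_feedback(history + [turn])
        history.append(turn)
    return False, history


# Usage with a scripted stand-in for the model: compute with a tool, then answer.
def toy_model(history: list[Turn]) -> tuple[str, str]:
    if not history:
        return "execute", "print(sum(range(1, 11)))"
    return "answer", history[-1].observation.strip()


solved, trace = solve_interactively(toy_model, check=lambda ans: ans == "55")
print(solved, len(trace))  # True 1
```

Passing `give_feedback=None` corresponds to the setting without the red dotted box; supplying a callable corresponds to the setting with it.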

1️⃣ For tool-augmented task-solving, we analyze how performance improves as the number of interaction turns with tools increases, without any language feedback (i.e., the figure above without the red dotted box).

2️⃣ To assess the ability to leverage natural language feedback, we measure the performance gain when LLMs receive natural language feedback from GPT-4 (compare the above figure with and without the red dotted box).
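Both quantities can be made concrete with a small sketch. It assumes each task's outcome is recorded as the turn at which it was first solved (or `None` if it was never solved within the budget). The success rate at turn k is then the fraction of tasks solved within k turns, and Δfeedback is the difference in success rate between runs with and without feedback at the same budget (k = 5 is assumed as the default here). The function names and the per-task outcomes are hypothetical, made up for illustration, and are not MINT results.

```python
from typing import Optional, Sequence


def success_rate_at_k(solved_at: Sequence[Optional[int]], k: int) -> float:
    """Fraction of tasks first solved at a turn <= k (None = never solved)."""
    if not solved_at:
        raise ValueError("need at least one task")
    hits = sum(1 for turn in solved_at if turn is not None and turn <= k)
    return hits / len(solved_at)


def delta_feedback(
    without_feedback: Sequence[Optional[int]],
    with_feedback: Sequence[Optional[int]],
    k: int = 5,
) -> float:
    """Gain in success rate attributable to feedback at a fixed turn budget k."""
    return success_rate_at_k(with_feedback, k) - success_rate_at_k(without_feedback, k)


# Hypothetical outcomes for four tasks (turn of first success, or None).
no_fb = [1, 3, None, None]
with_fb = [1, 2, 4, None]
print(success_rate_at_k(no_fb, k=5))   # 0.5
print(delta_feedback(no_fb, with_fb))  # 0.25
```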

We repurpose a diverse set of established datasets focusing on reasoning, coding, and decision-making and carefully curate them into a compact subset for efficient evaluation.

🛠️ We evaluate 20 LLMs: 4 closed-source and 16 open-source. They cover different model sizes and three training techniques: 1️⃣ pre-trained models (Base), 2️⃣ supervised instruction fine-tuning (SIFT), and 3️⃣ reinforcement learning from human feedback (RLHF).

📊 Our Findings on Tool-augmented Task-Solving capabilities of LLMs

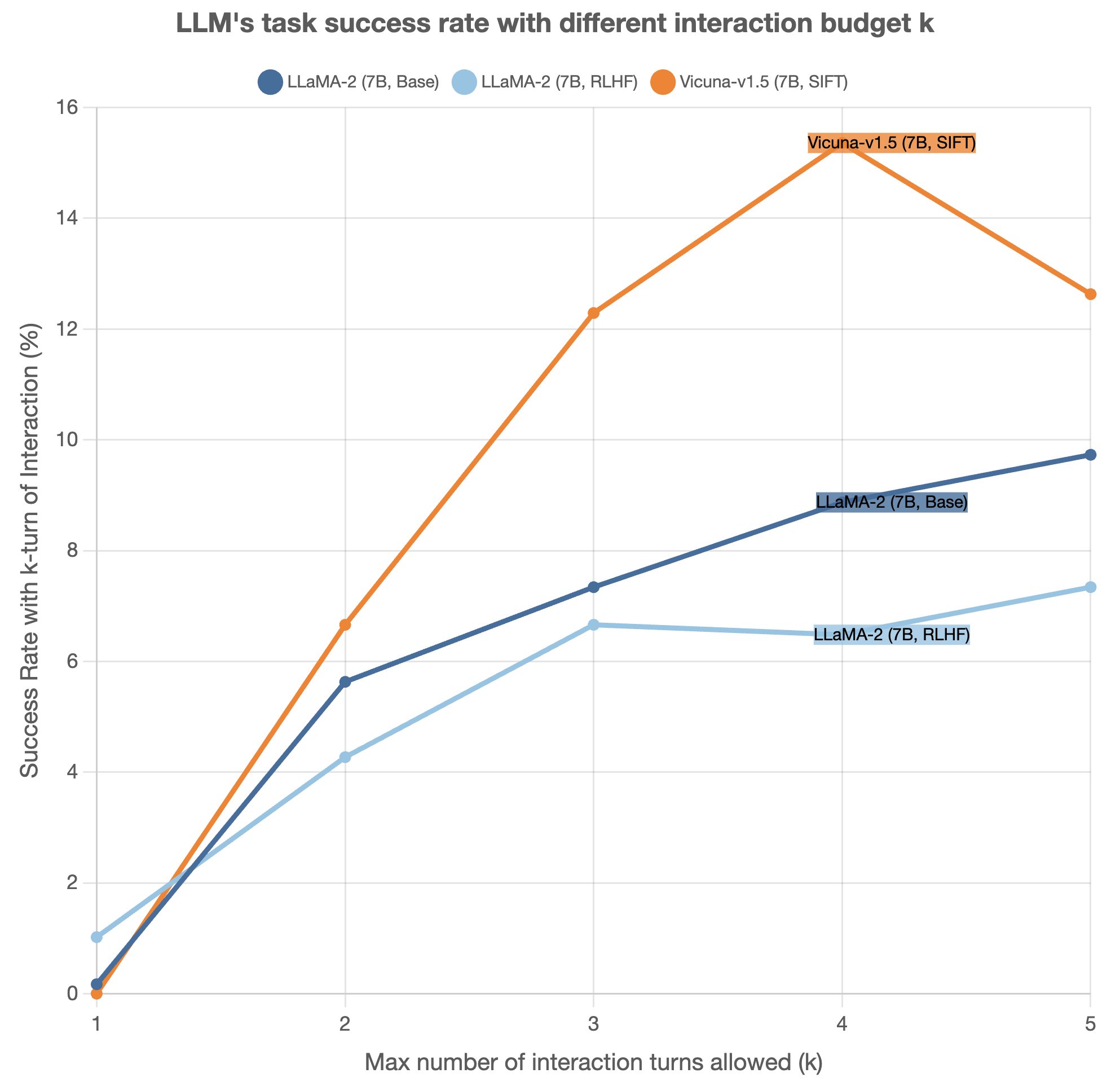

We find that all open-source models (only 4 are visualized) fall behind most commercial closed-source models in both success rate at k=5 (i.e., within five interaction turns) and improvement rate (the slope of success rate as k increases).
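The improvement rate can be read as the slope of a line fitted to success rate against the number of turns k. The sketch below assumes an ordinary least-squares fit over k = 1, ..., n; the function name and the success-rate values are illustrative and are not numbers from the paper.

```python
from typing import Sequence


def improvement_slope(rates: Sequence[float]) -> float:
    """Least-squares slope of success rate vs. turn k, with k = 1..len(rates)."""
    n = len(rates)
    if n < 2:
        raise ValueError("need success rates for at least two values of k")
    ks = range(1, n + 1)
    mean_k = sum(ks) / n
    mean_r = sum(rates) / n
    # slope = cov(k, rate) / var(k)
    covariance = sum((k - mean_k) * (r - mean_r) for k, r in zip(ks, rates))
    variance = sum((k - mean_k) ** 2 for k in ks)
    return covariance / variance


# Illustrative success rates at k = 1..5 (made-up numbers).
rates = [0.10, 0.15, 0.20, 0.25, 0.30]
print(round(improvement_slope(rates), 4))  # 0.05
```

A fitted slope is less sensitive to a single noisy turn than simply subtracting the success rate at k=1 from the one at k=5.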

SIFT on multi-turn data may help. Vicuna-v1.5 (7B), a SIFT variant of LLaMA-2 trained on ShareGPT conversations (most of which are multi-turn), outperforms LLaMA-2 (Base and RLHF).

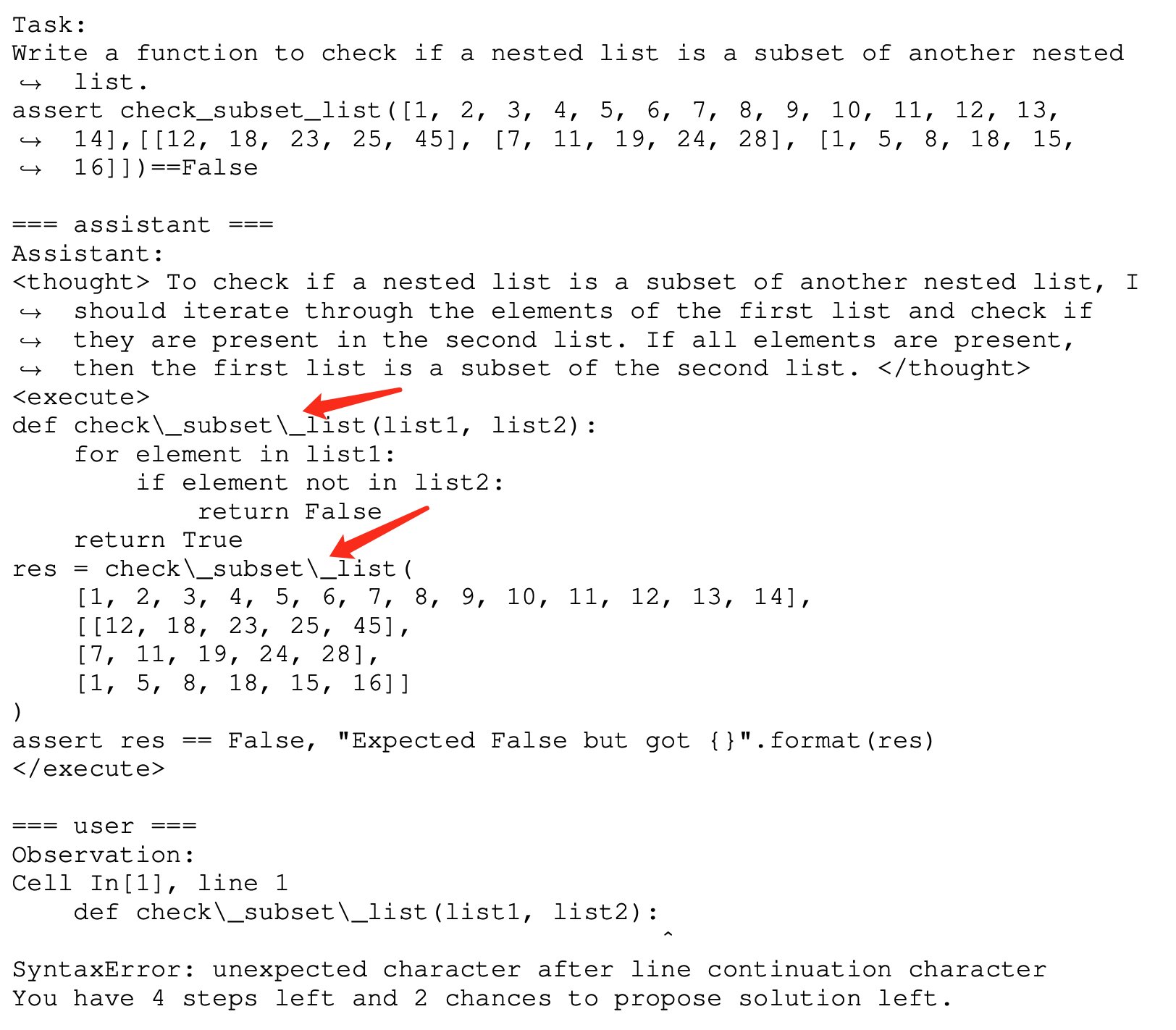

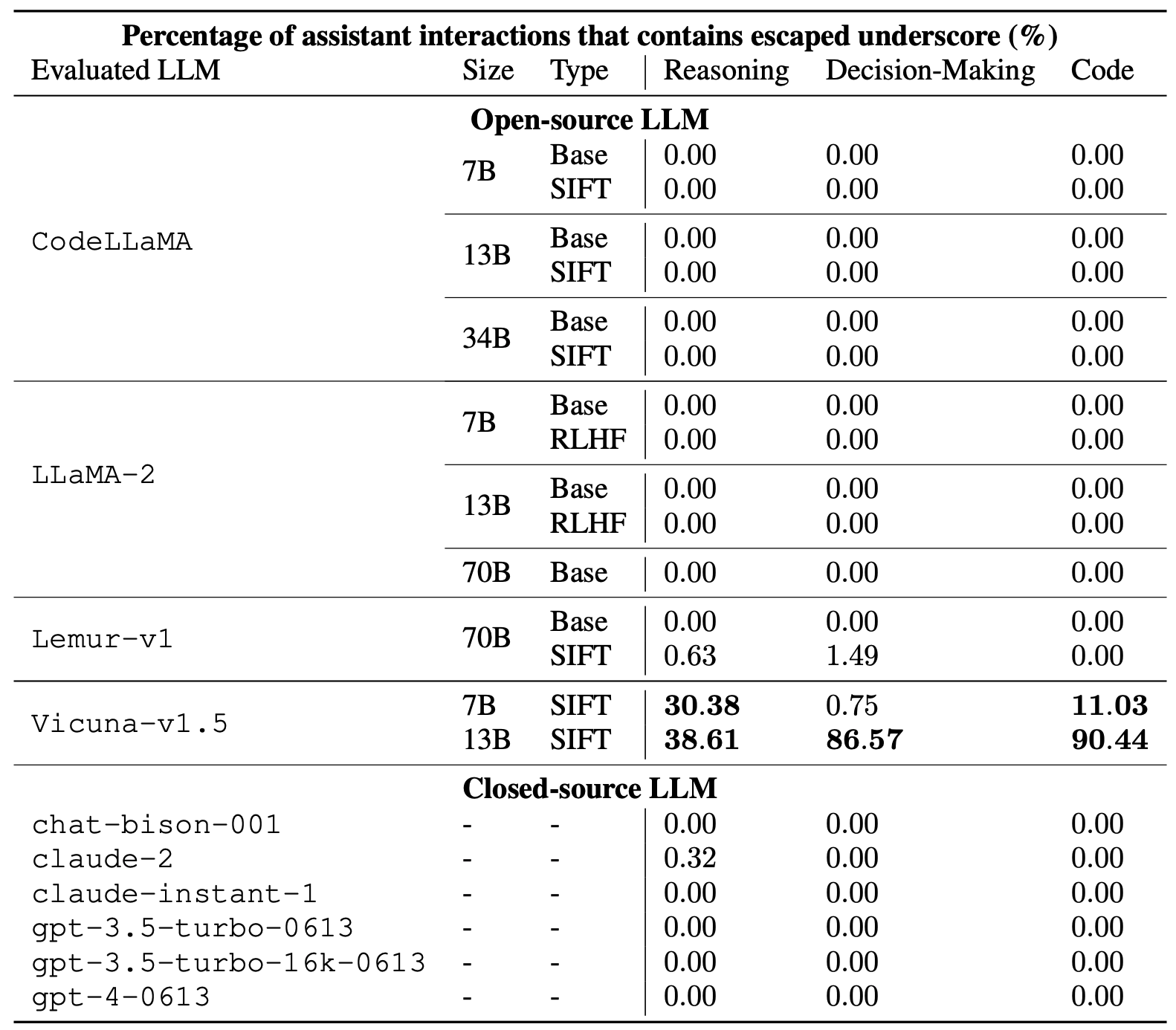

❗ Surprisingly, Vicuna-v1.5 13B (trained on ShareGPT) performs worse than 7B! It produces escaped underscores (“`\_`”) that hurt performance, more severely for 13B. We find “`\_`” in ~15% of the 94k ShareGPT data! A similar issue was noted in CodeLLaMA-Instruct; see the paper for details.
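A data check like the one described can be sketched in a few lines. This assumes records in the common ShareGPT JSON layout (a `conversations` list of `{"from": ..., "value": ...}` messages) and counts a conversation if any of its messages contains the two characters backslash + underscore. Whether the ~15% figure was computed per conversation or per message is not stated here, so the counting unit is an assumption; the function names and sample records are made up.

```python
from typing import Iterable

ESCAPED_UNDERSCORE = "\\_"  # the two characters: backslash followed by underscore


def has_escaped_underscore(record: dict) -> bool:
    """True if any message in a ShareGPT-style record contains an escaped underscore."""
    return any(
        ESCAPED_UNDERSCORE in message.get("value", "")
        for message in record.get("conversations", [])
    )


def escaped_underscore_fraction(records: Iterable[dict]) -> float:
    """Fraction of conversations containing at least one escaped underscore."""
    records = list(records)
    if not records:
        return 0.0
    return sum(has_escaped_underscore(r) for r in records) / len(records)


# Made-up records: a plain "my_var" does not count, "new\_name" does.
sample = [
    {"conversations": [
        {"from": "human", "value": "Please rename my_var."},
        {"from": "gpt", "value": "Use `new\\_name` instead."},
    ]},
    {"conversations": [
        {"from": "human", "value": "hi"},
        {"from": "gpt", "value": "Hello!"},
    ]},
]
print(escaped_underscore_fraction(sample))  # 0.5
```

On a real dump, the records would first be loaded with `json.load`; the check itself is a plain substring test, so it also flags intentional escapes inside markdown.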

Furthermore, we find that RLHF hurts LLM-tool multi-turn interaction in the LLaMA-2 series. However, it is unclear whether RLHF is problematic in general or only hurts when applied to single-turn data (as is the case for LLaMA-2).

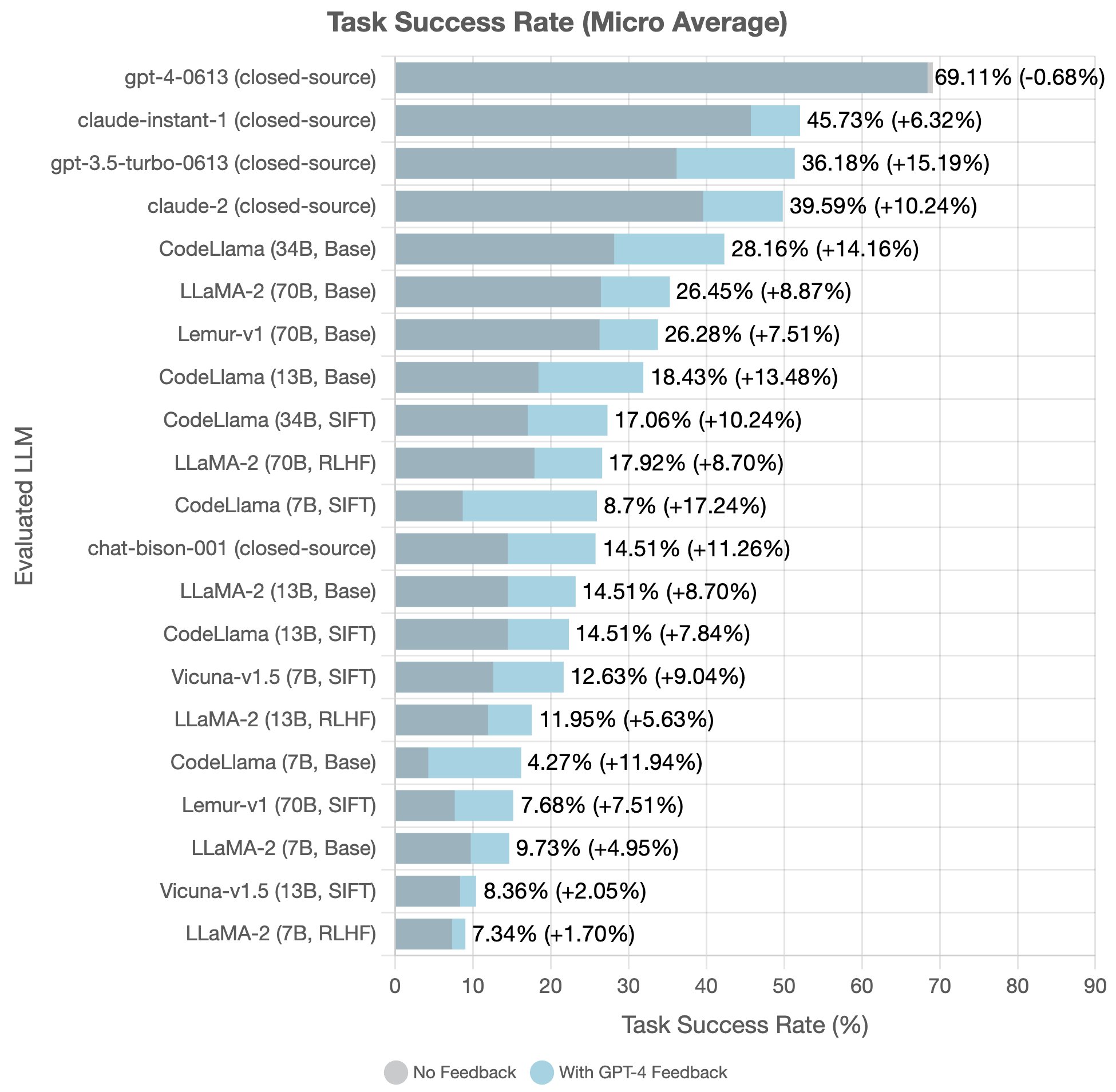

📊 Findings on LLMs’ Ability to Leverage Natural Language Feedback

We find no significant difference between open- and closed-source models in Δfeedback (performance gain due to feedback).

As with LLM-tool interaction, we find that SIFT and RLHF hurt models’ ability to leverage feedback. On CodeLLaMA (except 7B), LLaMA-2, and Lemur-v1, the SIFT/RLHF variants all have lower Δfeedback and success rates than their base counterparts.

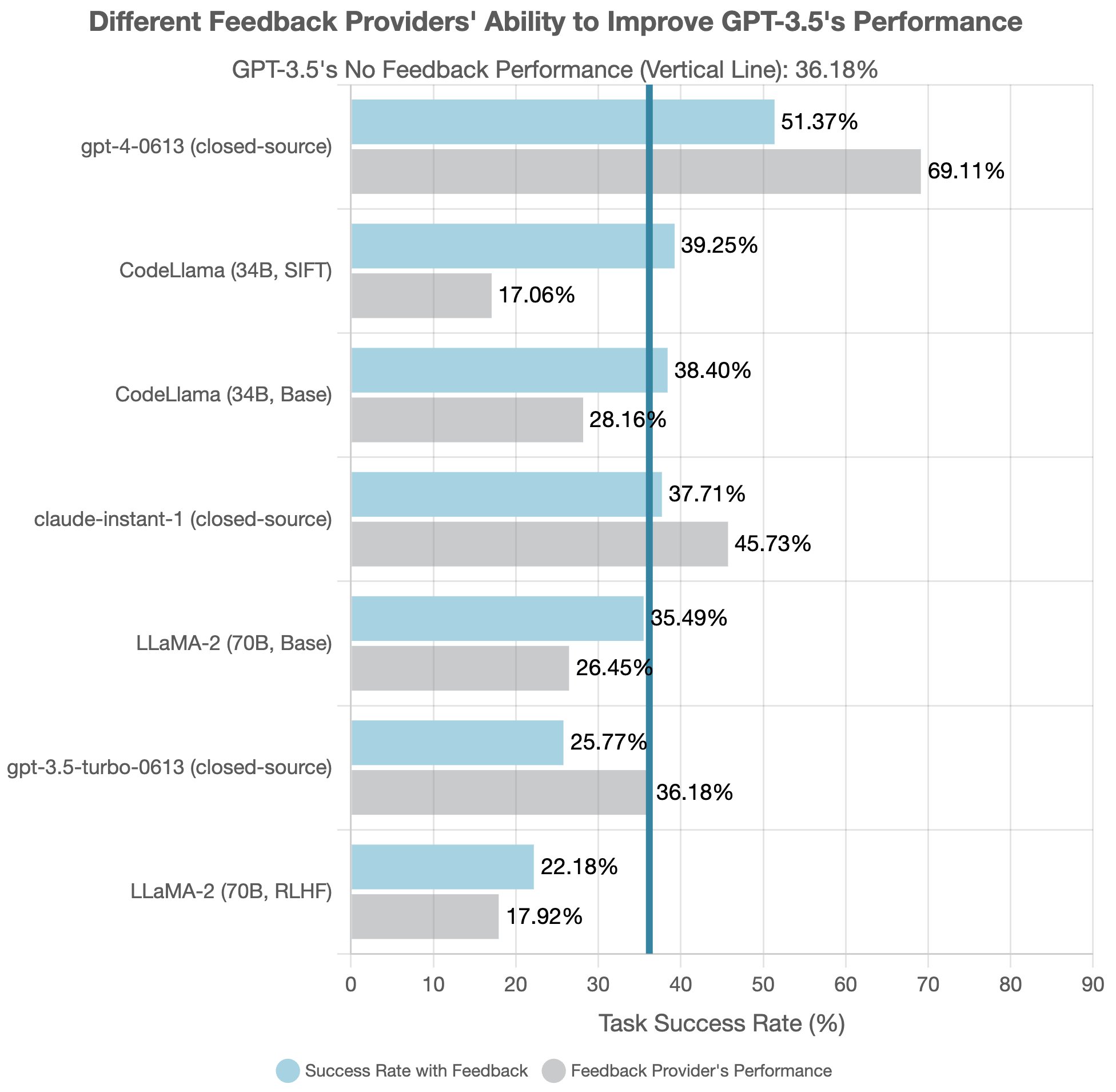

📊 Findings on LLMs’ Ability to Provide Natural Language Feedback

We can assess LLMs’ effectiveness as feedback providers by using different LLMs to provide feedback to a fixed LLM (gpt-3.5-turbo-0613).

We find that task-solving ability could be orthogonal to feedback-providing ability: higher task-solving performance does not necessarily translate to better feedback-providing capability and vice versa.

For example, GPT-3.5 excels at task-solving but struggles with self-feedback. On the other hand, CodeLLaMA-34B-Instruct (SIFT), despite the poorest task-solving performance (-19% vs. GPT-3.5), can still provide feedback that improves the stronger GPT-3.5.

Thanks for reading!

🚀 We hope MINT can help measure progress and incentivize research on improving LLMs’ capabilities in multi-turn interactions, especially for open-source communities, for which multi-turn human evaluation has been less accessible than for commercial LLMs.

Please check out our preprint and project website for more details!

- Website (interactive visualization of results): https://xingyaoww.github.io/mint-bench/

- Code: https://github.com/xingyaoww/mint-bench

- Paper: https://arxiv.org/abs/2309.10691

Original thread:

— Xingyao Wang (@xingyaow_) September 21, 2023
